As is customary for any hardware, we had to test our new drives in one of our latest deployments, especially how they are going to behave w.r.t. write caching. This specific deployment had RAID controllers set in JBOD mode, exposing all drives directly to the operating system, which we use as bluestore ceph osds.

This was a great opportunity to explore the reliability of bluestore as well as the new drives and the whole (I might add, a bit weird) system. And the work has really paid off, as we’re going to mention in this post, but let’s start with the basics.

Standard way of write caching

If you’re using your drives in the most standard way, i.e. putting a file system on top and writing to your files (e.g. a database), this is a rough view of the road that your data travels.

- After the application write()s the file, the given data goes to its file buffer first.

- Then the application calls flush() on the buffer and the data reaches the page cache, which is basically a piece of volatile memory.

- Then someone signals the filesystem to send the data to the actual drive.

- Drives generally employ some form of write caching, which basically means the drive holds this data in its volatile memory.

- Then it moves the data to its persistent memory: a magnetic disk, a NAND cell or something similar.

This, again, is a very rough description. In reality there are levels and levels of coordination between these steps, but for this post we’re keeping things simple and saying we have 3 places where our data can be lost pretty easily after a power outage:

- File Buffer

- OS page cache

- Drive write cache

If you have some form of a hardware RAID controller, it’s possible that it also has some amount of memory to be used as cache, and that’s the 4th place where your written data can be lost. Devices with this type of cache usually also have a battery to protect that data though.
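To make the first three layers concrete, here is a minimal Python sketch of an application pushing its data down one layer at a time. The function name `durable_write` is made up for illustration, and the comments describe the usual behaviour on Linux rather than a guarantee for every file system or drive.

```python
import os


def durable_write(path, payload):
    """Write payload to path and push it through each caching layer in turn."""
    with open(path, "wb") as f:
        # 1. The data lands in the user-space file buffer of this process.
        f.write(payload)
        # 2. flush() hands the buffer to the kernel, so the data now sits
        #    in the OS page cache (still volatile memory).
        f.flush()
        # 3. fsync() asks the kernel to send the data to the drive and to
        #    wait until the drive reports it as written. Whether the drive
        #    itself is being honest about that is the topic of this post.
        os.fsync(f.fileno())
```

If the process dies after step 1, or the machine loses power after step 2, the data may be gone. Only after step 3 returns is the application entitled to assume otherwise.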

In short, over the years, engineers thought of a lot of ways to delay the writes to a persistent place, and a whole lot of other ways to keep this data from being lost on an unexpected power loss.

Going off tracks

In comparison to the standard way of writing explained above, the deployment in question today differs in the infrastructure handling the drives. Applications are the same, they still meddle with files in a standard XFS file system, but the block device this file system runs on is an RBD: rados block device. This is ceph’s block storage solution, meaning there’s a piece of software acting as a block device driver, but instead of writing to a single hard drive, it uses objects in the ceph cluster, which then uses the drives. Think of it as a hardware RAID controller, with a lot more sophistication built into it.

Unfortunately, the amount of software sophistication doesn’t really matter in the face of an unexpected power loss. In the end, you need to know which data is safe and which might have been lost. To emphasize: sometimes, it might be ok to lose data. But the systems we build on top of this possibility must know that fact beforehand. Simply put, an application should be aware that the data can still be lost after calling write(). But after a sync() returns, it can assume that the data is safe.

So if what you have in place of “a single drive and a file system” is something like:

- Some number of drives

- Behind RAID Controllers

- But in JBOD mode, so you’re using the drives directly (??!)

- Ceph bluestore using the drives in raw form, so no filesystem

You want to test how safe your data is.

Evolutionary fear of caching

In the standard “single drive and a filesystem” case, data being lost in the drive write cache is pretty much history. Filesystems and device drivers in the kernel already made the effort of coordinating this stuff, so normally no one worries about it. Except the kernel developers.

In the RAID controller case, it’s not that clear. If it employs some sort of write caching, it means your sync() calls are generally handled by that cache without hitting any drives. It’s now the controller’s responsibility to ensure that the data is safe within the drives. If the controller is unaware that the drives are also caching, it might think it already persisted some data in the hard drive whereas it can still be lost. And if it can be lost, it will be lost.

So the general suggestion in these scenarios is to disable the individual drives’ write caches, so you only have RAID controller cache, and it can be sure when it writes data to a physical drive it’s written for good. A fine solution considering you already have a write cache protected by a battery.

Add the fact that we’re running ceph bluestore instead of a filesystem, and you can see why we wanted to be sure whether we’d be somehow affected by any drive write cache.

The rubber, please meet the road

Initial testing was pretty uneventful. Just as it was expected, some clever engineers already thought about that and made sure we don’t lose data even while drives are caching our writes. Hat tip to the people working on the firmware for those drives, if only I could reference your source code too.

But since we’re already in our labcoats, why stop now, right? The next obvious step is to disable the write cache anyway, just to see the amount of performance impact. Because when the drive doesn’t use any cache, all written data has to go directly to the disk, causing more seeks and more latency which, according to some, could be substantial.

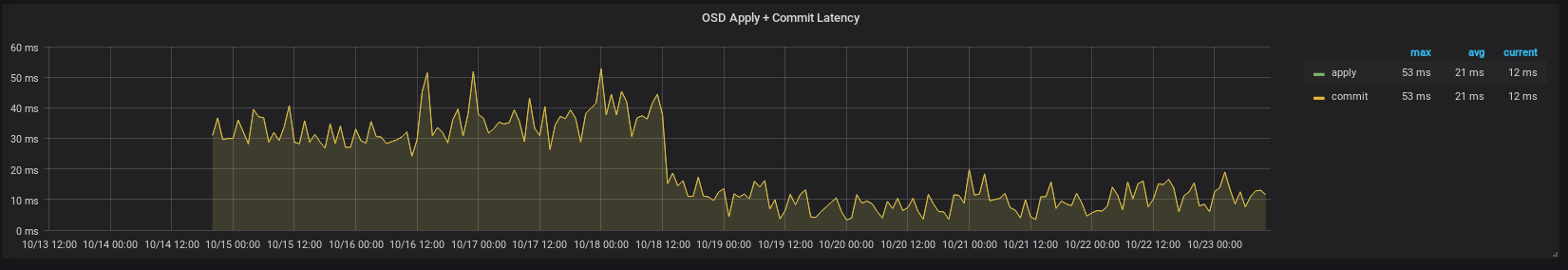

Without further ado, this is what happened:

No, we didn’t do the test in reverse. Around the middle of this chart is where we disabled the write caches for all the drives. Average latency was around 30ms while we had drive caches enabled and around 10ms when we didn’t. That’s about a 3x increase in overall performance. Totally unexpected! We like it when test results are unexpected, it means we’re about to learn a new thing or two.

Further digging led us to the piece that made the difference. Collecting data from the osd timer around fdatasync, where the osd flushes writes to the drive, we recorded the latency numbers and noted down some statistics while the drive cache was enabled and then disabled:

| Latency (seconds) | Cache Enabled | Cache Disabled |
|---|---|---|
| mean | 0.021139 | 0.000395 |
| std | 0.023446 | 0.001101 |
| min | 0.000152 | 0.000003 |
| p25 | 0.008029 | 0.000134 |
| p50 | 0.009806 | 0.000203 |
| p75 | 0.025923 | 0.000280 |
| max | 0.126596 | 0.010060 |

As you can see, this is where we shed 20ms off our average latency. Meaning the drive returns our sync requests almost immediately now. But why?
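If you want to get a feel for this kind of measurement on your own drives, a rough standalone approximation could look like the sketch below. To be clear, this is not the osd timer we collected our numbers from. It is a small Python probe with made-up function names (`measure_sync_latency`, `summarize`) that writes a block, times the sync call that follows, and reports the same statistics as the table above. It goes through a file system rather than bluestore, so the absolute numbers will not be comparable to ours.

```python
import os
import statistics
import tempfile
import time

# fdatasync is not available on every platform, so fall back to fsync.
_sync = getattr(os, "fdatasync", os.fsync)


def measure_sync_latency(path, rounds=100, block=4096):
    """Write `block` bytes, then time the sync call; repeat `rounds` times."""
    samples = []
    fd = os.open(path, os.O_WRONLY | os.O_CREAT, 0o600)
    try:
        for _ in range(rounds):
            os.write(fd, os.urandom(block))
            start = time.perf_counter()
            _sync(fd)
            samples.append(time.perf_counter() - start)
    finally:
        os.close(fd)
    return samples


def summarize(samples):
    """Return the statistics used in the table above, in seconds."""
    p25, p50, p75 = statistics.quantiles(samples, n=4)
    return {
        "mean": statistics.mean(samples),
        "std": statistics.stdev(samples),
        "min": min(samples),
        "p25": p25,
        "p50": p50,
        "p75": p75,
        "max": max(samples),
    }


if __name__ == "__main__":
    # The temporary directory is an assumption; point the path at a file
    # system that lives on the drive you actually want to probe.
    with tempfile.TemporaryDirectory() as d:
        stats = summarize(measure_sync_latency(os.path.join(d, "probe.bin")))
        for name, value in stats.items():
            print(f"{name:>4}  {value:.6f}")
```

Running it once with the drive cache enabled and once with it disabled should give two columns you can put side by side.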

Into the unknown

To be honest, it’s a bit hard for me to navigate within the kernel source after that. So I had to take a few steps back and start with a simpler question: are there any hints about write cache behaviour in any of the information about these drives? And apparently there is a mention of an advanced write caching, but just that. No more details. And you know, more often than not, that means some marketing department decided to add a prefix to a usual feature, and what they came up with this time was “advanced”.

I couldn’t have been more wrong. This was a rare time where the feature was being undersold. To the ignorant folks like me at least. What they did was to place a non-volatile NAND, essentially an SSD, behind the usual DRAM cache, and move the cached data there after a power failure. Yes, after a power failure. How do you move data without power? You use the kinetic energy in the disk motor, which, as we all know from the spinning-down sound, keeps turning for a few more seconds after power is cut.

Earlier in this post, I said:

> engineers thought of a lot of ways to delay the writes to a persistent place, and a whole lot of other ways to keep this data from being lost on an unexpected power loss.

but this is by far the most complex and ingenious way I have ever seen. It’s just out there. And they have more. If you’re interested, please go ahead and read all about it in their paper. I guarantee it’s worth your time.

Finally, the only thing you need to do to take advantage of that is to disable the write cache.

sdparm --clear WCE --save /dev/sda

See section 4.5.3 in this document.
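Since we did this for every drive in the box, here is a small Python sketch of how the sdparm command above could be applied to a list of devices. The function names and the device paths are hypothetical, and the extra `sdparm --get WCE` call is my addition to read the setting back afterwards. By default it only returns the commands it would run, so you can review them first.

```python
import subprocess


def disable_wce_argv(device):
    """argv for the sdparm command shown above, for one device."""
    return ["sdparm", "--clear", "WCE", "--save", device]


def query_wce_argv(device):
    """argv that reads the WCE bit back, to check the change took effect."""
    return ["sdparm", "--get", "WCE", device]


def disable_write_cache(devices, dry_run=True):
    """Plan (and, unless dry_run, execute) the commands for every device."""
    planned = []
    for dev in devices:
        for argv in (disable_wce_argv(dev), query_wce_argv(dev)):
            planned.append(argv)
            if not dry_run:
                # Needs root and a drive that supports the caching mode page.
                subprocess.run(argv, check=True)
    return planned


if __name__ == "__main__":
    # Hypothetical device names; replace them with your own.
    for argv in disable_write_cache(["/dev/sda", "/dev/sdb"]):
        print(" ".join(argv))
```

Pass `dry_run=False` only once you are sure the list contains the drives you mean.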