Most of us have a few servers in our offices, at a colocation provider or in part of a data center. Adding a few more every year is not much of a problem: unpack, deploy, plug in, install, reboot and that’s it. That is still a few more steps than you’d need in a cloud environment, but nothing an experienced technician can’t handle. Things change when the numbers reach the hundreds, though.

The obvious differences are the power, floor space, man-hours, money and so on that you need, much like the difference between building a shed and building a whole block. Another, somewhat lesser-known difference is uncertainty. This post is about one specific kind of it: making sure the hardware you unpack is what you ordered and works the way you expect it to.

It’s easy to do this for a system or two: deploy the OS if it isn’t already there and see what it tells you. For a few hundred you can do the same over and over, only to wonder whether the 257th system really had a CPU failure or you made a mistake just before lunch. There’s a better way, though.

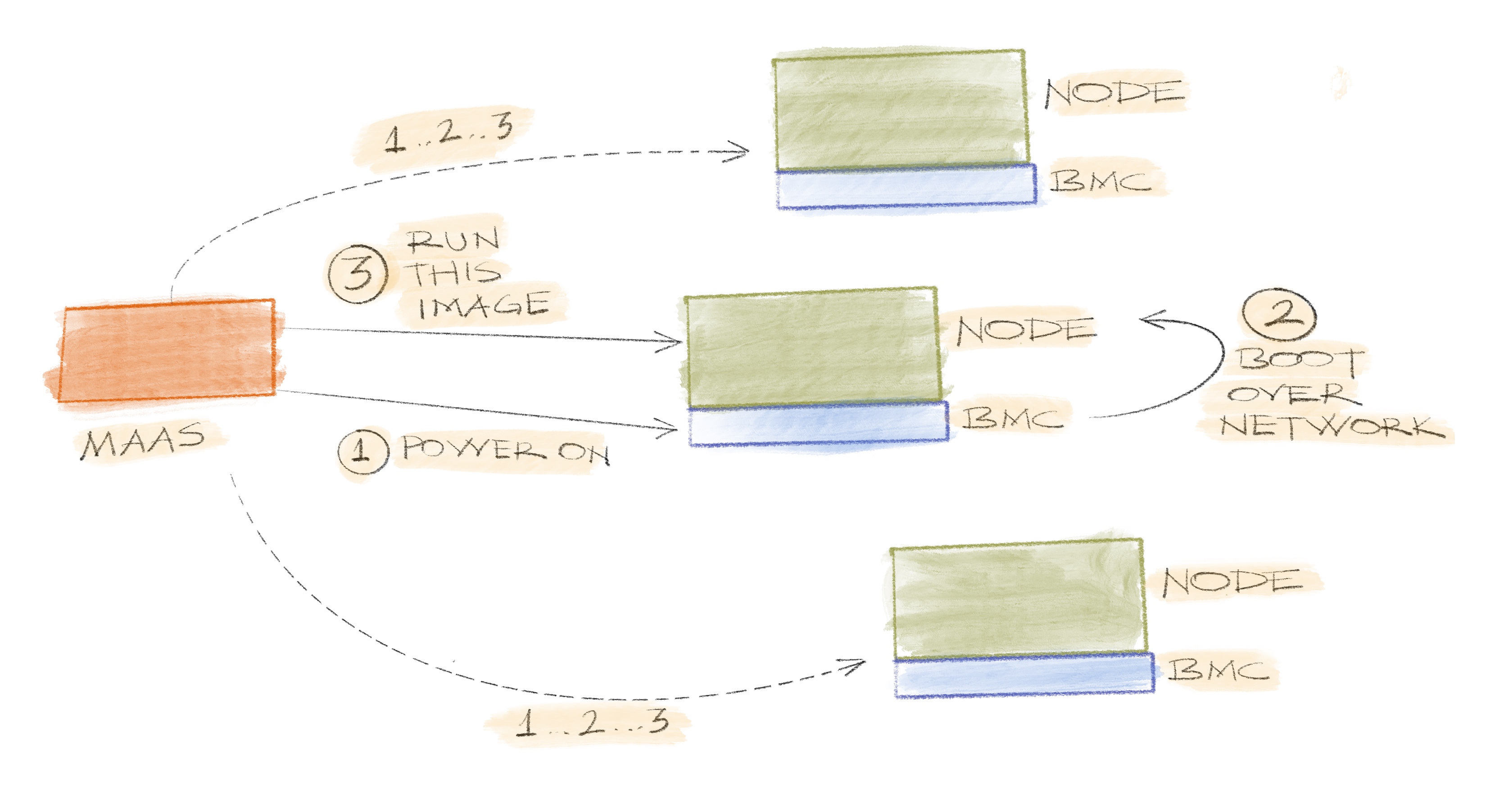

Nowadays almost all server systems have some sort of BMC (baseboard management controller). Every vendor has a different name for it, but basically it’s a small device that lets you monitor and control the hardware over the network. You can power the machine on or off, check fan status, choose the boot target and so on. Combine that with booting over the network and you no longer have to be near the machine.
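As a rough illustration of what talking to a BMC looks like, here is a minimal sketch that assumes the BMC speaks IPMI over LAN and that `ipmitool` is installed. The `bmc` wrapper, the `BMC_USER` and `DRY_RUN` variables and the address are made up for this example; the password is expected in the `IPMI_PASSWORD` environment variable, which is what ipmitool's `-E` flag reads.

```shell
#!/bin/sh
# Hypothetical helper: run one ipmitool command against a BMC.
# With DRY_RUN set, it only prints the command instead of running it.
bmc() {
    host=$1
    shift
    if [ -n "${DRY_RUN:-}" ]; then
        echo "ipmitool -I lanplus -H $host -U ${BMC_USER:-admin} -E $*"
    else
        ipmitool -I lanplus -H "$host" -U "${BMC_USER:-admin}" -E "$@"
    fi
}

# Ask the machine to boot from the network next, then power it on.
# 192.0.2.10 is a documentation address, not a real BMC.
DRY_RUN=1 bmc 192.0.2.10 chassis bootdev pxe
DRY_RUN=1 bmc 192.0.2.10 chassis power on
```

Dropping `DRY_RUN=1` would send the commands for real. In practice MAAS drives the BMC for you, so a wrapper like this is mostly useful for spot checks.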

MAAS helps deploy operating systems at scale, and it can also help test and verify the hardware beforehand. After the first discovery, it boots the machine over the network, runs some scripts to identify the components and stores the discovered details. That’s the time to cross-check the order against what is actually there. MAAS shows the details in its web UI, but for environments where checking machines one by one would take too long, it also has an API and a Python client package.
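The cross-check itself can be scripted. The sketch below assumes you have already exported the discovered details (for example through the API) into a CSV file with hostname, CPU count and memory in MiB, and that your order is in a file with the same layout. The file layout, the hostnames and the `compare_inventory` function are made up for illustration; this is not something MAAS ships.

```shell
#!/bin/sh
# Hypothetical helper: compare an order manifest with discovered inventory.
# Both files are headerless CSV: hostname,cpu_count,memory_mib
compare_inventory() {
    awk -F, '
        # First file: remember what was ordered for each hostname.
        NR == FNR { want[$1] = $2 "," $3; next }
        # Second file: compare each discovered machine with the order.
        {
            seen[$1] = 1
            if (!($1 in want))
                print "UNEXPECTED " $1
            else if (want[$1] != ($2 "," $3))
                print "MISMATCH " $1 " ordered=" want[$1] " found=" $2 "," $3
        }
        # Anything ordered but never discovered.
        END { for (h in want) if (!(h in seen)) print "MISSING " h }
    ' "$1" "$2"
}

# Small demonstration with made-up data: node-002 reports less memory.
ordered=$(mktemp)
discovered=$(mktemp)
printf '%s\n' 'node-001,64,524288' 'node-002,64,524288' > "$ordered"
printf '%s\n' 'node-001,64,524288' 'node-002,64,393216' > "$discovered"
compare_inventory "$ordered" "$discovered"
rm -f "$ordered" "$discovered"
```

An empty output means everything that was ordered showed up with the expected CPU count and memory. Any line that does appear names the machine to go and look at.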

During this identification run, which MAAS calls commissioning, it’s also possible to run tests on the hardware to find factory defects. Out of the box these tests may include a S.M.A.R.T. conveyance test and stressing the CPU or memory. And when the systems have custom components like RAID controllers or GPUs, you can prepare scripts for whatever is necessary and have MAAS collect the results too.

Finally, we sometimes use this functionality to configure components. For example, if you need to create a RAID array on a MegaRAID device, you can upload a script like the following to MAAS and have it run during commissioning.

```bash
#!/bin/bash
#
# --- Start MAAS 1.0 script metadata ---
# name: 00-create-raid5
# title: Create raid5
# description: Configures a RAID5 using first 4 drives in the controller
# script_type: commissioning
# packages:
#   url: http://some-accessible-http-server/storcli_xxx_all.deb
# parallel: disabled
# --- End MAAS 1.0 script metadata ---

# Create one RAID5 virtual drive from slots 0-3 of enclosure 0 on every controller.
sudo /opt/MegaRAID/storcli/storcli64 /cAll add vd type=r5 drives=0:0-3
```

One small catch: there’s a default set of scripts, and MAAS runs everything in alphabetical order. So if you want the default commissioning scripts to identify your newly created device, you have to make sure that this one runs before them. A simple solution is to prefix your script names with numbers like 00-, 10-.
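To see why zero-padded prefixes matter, here is a small sketch that sorts a few script names the way a plain alphabetical sort would. It approximates the ordering with a byte-wise `sort`, which is an assumption about the exact collation. All names except `00-create-raid5` are hypothetical stand-ins, and `run_order` is a helper made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: print names in plain alphabetical (byte-wise) order.
run_order() {
    printf '%s\n' "$@" | LC_ALL=C sort
}

run_order 50-default-commissioning 9-late-script 10-check-gpu 00-create-raid5
# prints:
# 00-create-raid5
# 10-check-gpu
# 50-default-commissioning
# 9-late-script
```

Note that `9-late-script` lands last even though 9 is less than 10, because names are compared character by character. Sticking to two-digit prefixes avoids that surprise.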

Now, with just a few clicks in the web UI, you’ve identified, tested and configured a few hundred nodes within hours instead of weeks. For thousands or tens of thousands you’re going to hit some barriers, but that’s all for this post.