Bitter sweet data compression with ceph

Almost all data storage solutions have some sort of compression capability. From time to time it gets touted as a killer feature that reduces storage costs substantially and even moves some projects into the realm of the feasible. In our experience, though, the data was always compressed beforehand, because compression was and is the obvious first step, not only before storing the data but also before moving it around, sending it to the first client, etc.

Exploring the effects of jumbo frames

If you’ve planned your network before starting to run your distributed ceph storage, you’ve most certainly come across the advice to utilize jumbo frames. If you haven’t planned your network before starting to run your distributed system, you’re pretty much screwed. And when you realize you’re screwed (but not before), you start to look for advice on what to do with your massive investment and again you find the advice to utilize jumbo frames.

Costs and benefits of erasure coding - In style

In the last post we left off at the point where we needed to improve recovery performance in our largish cluster but didn’t really know what to expect or what to improve. So in this post we evaluate some alternatives and run some tests to see what happens when we lose a drive. But first we start by reading through the docs a bit.

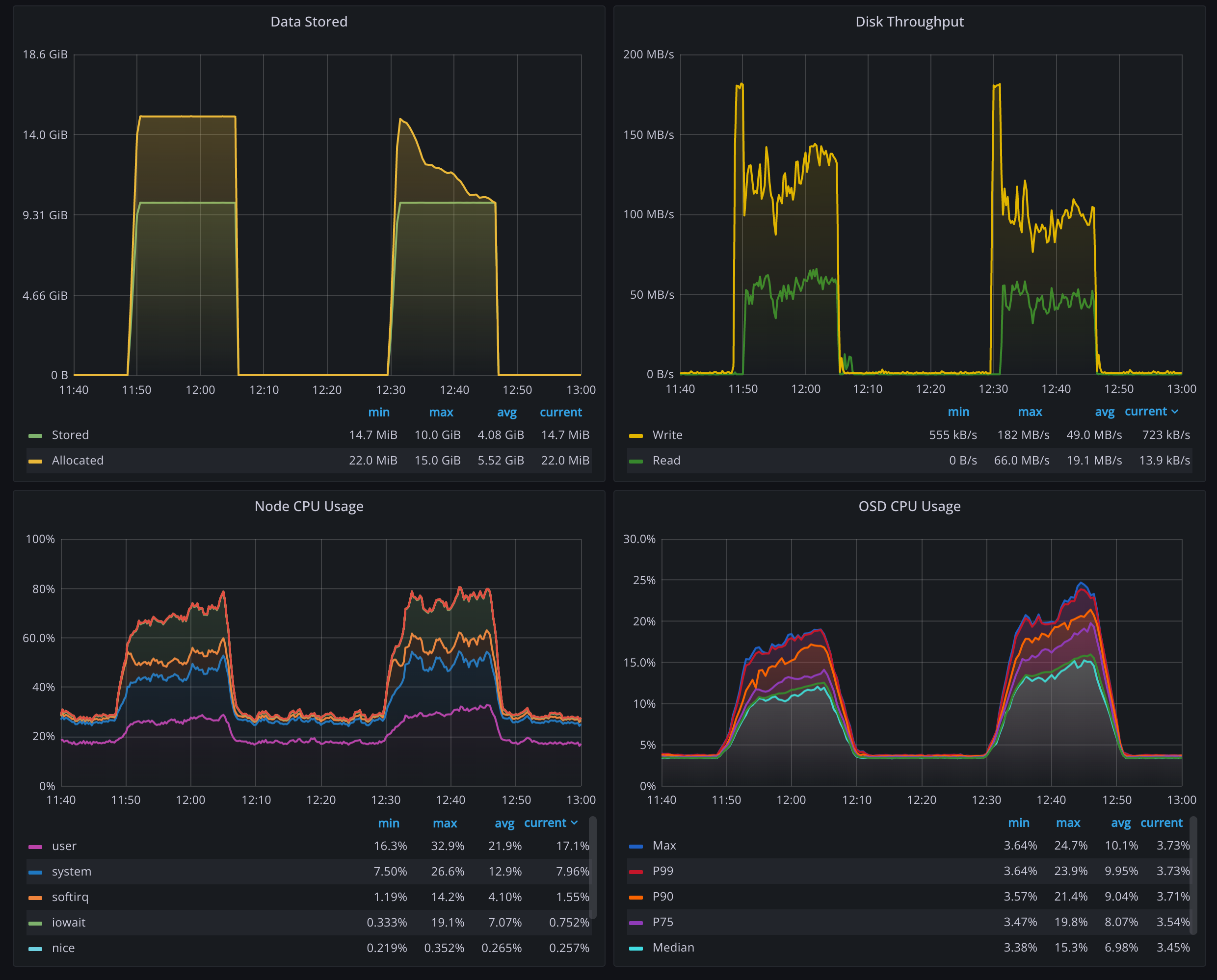

Costs and benefits of erasure coding - In numbers

Last time we talked about different levels of complexity and why engineers complicate the things they complicate. The short answer was: it’s easier that way. Not because they love to complicate things, or to prove that they can handle complex things better than everyone else, but because it’s easier. It’s easier to complicate large and costly problems to make them cheaper than to find the massive amounts of funding needed to solve them in a simpler way. Simple is expensive.

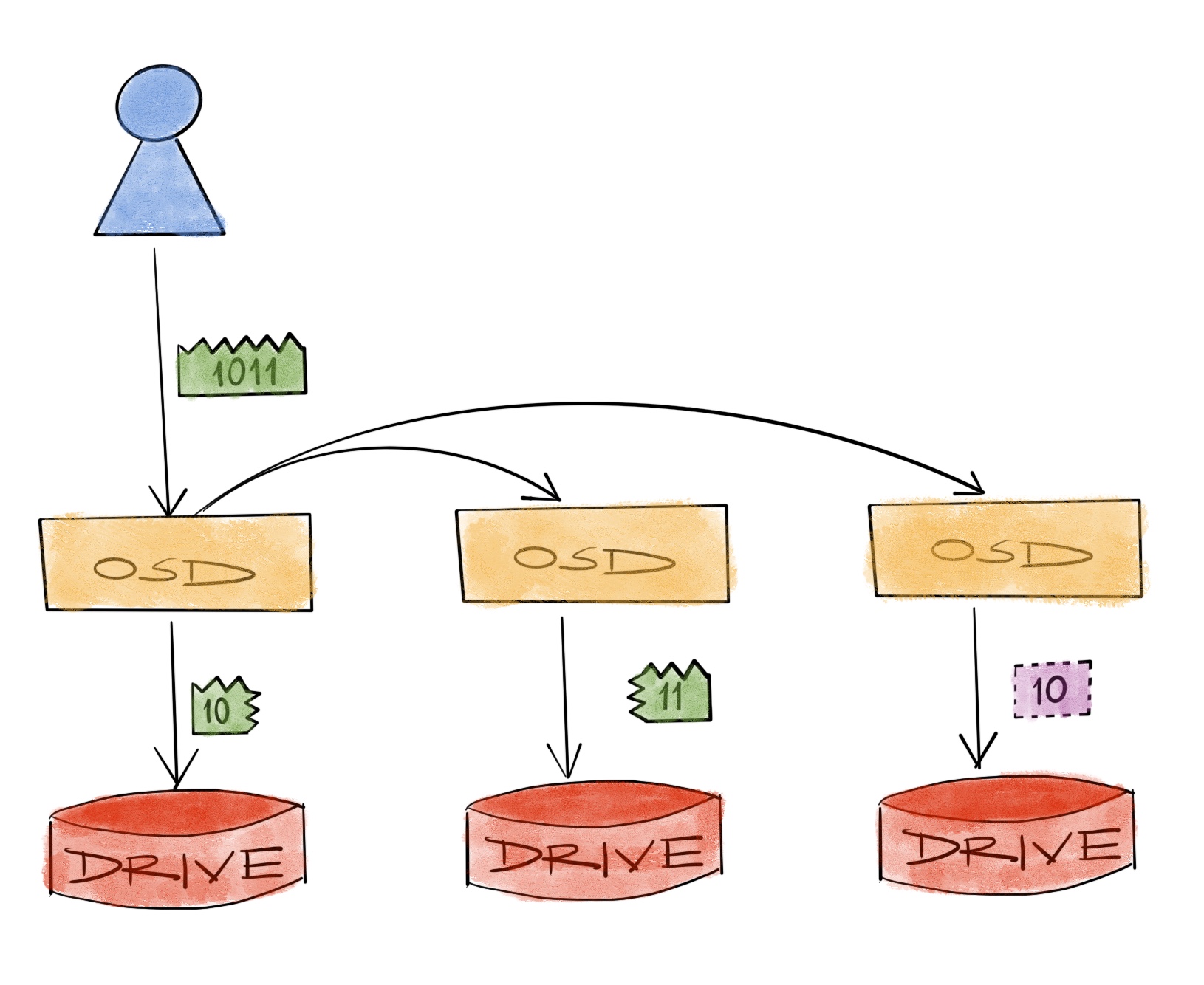

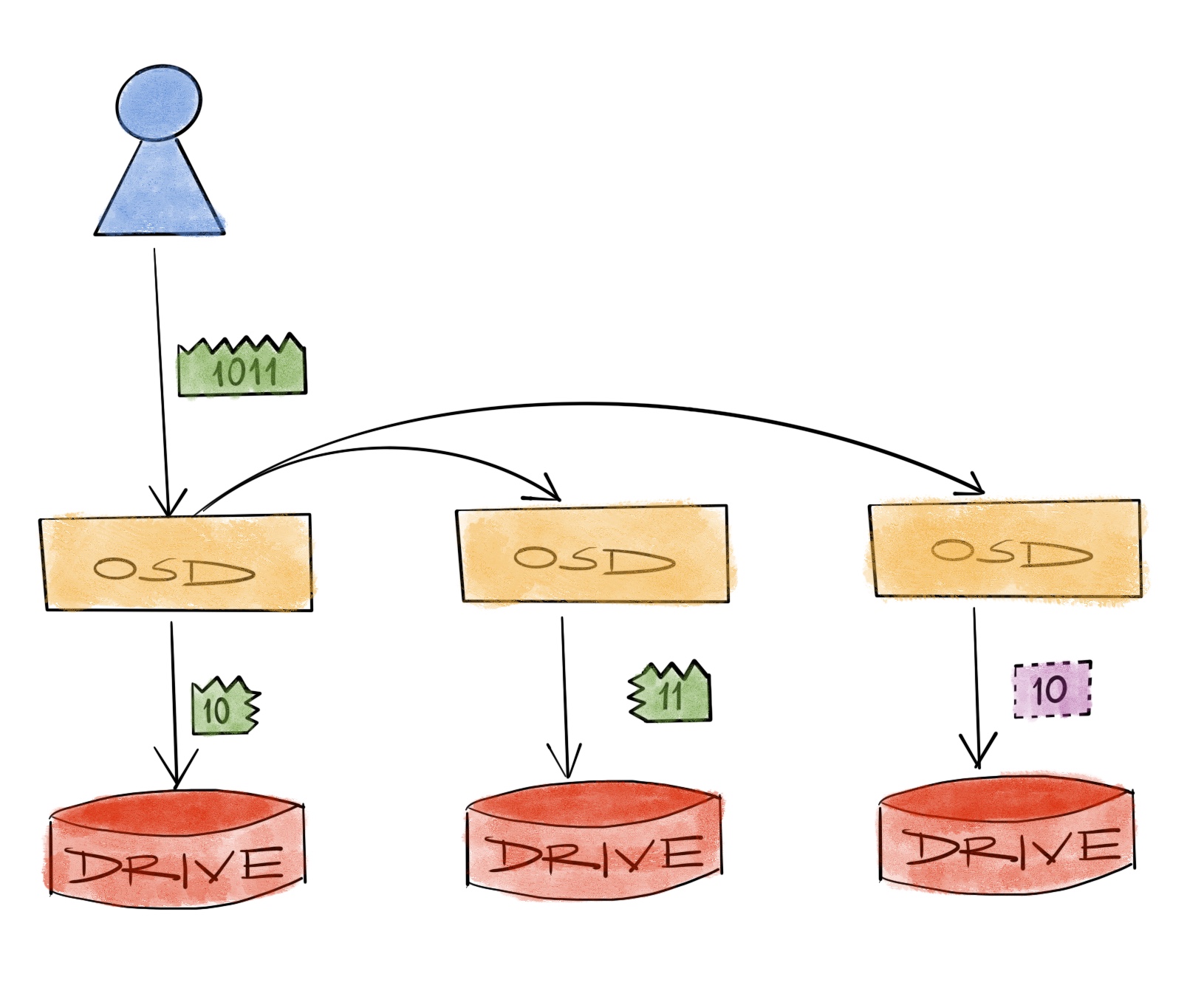

Costs and benefits of erasure coding

Durability is what separates a data storage system from simply using an external hard drive, a USB stick or a smartphone. When one of those simpler storage solutions fails, it’s safe to say that the data is toast. When we don’t want the data to be toast when things drop out of our pockets, we back it up to a more secure place like “the cloud”. We do that because we cannot reliably stop things from falling out of our pockets no matter what we do.

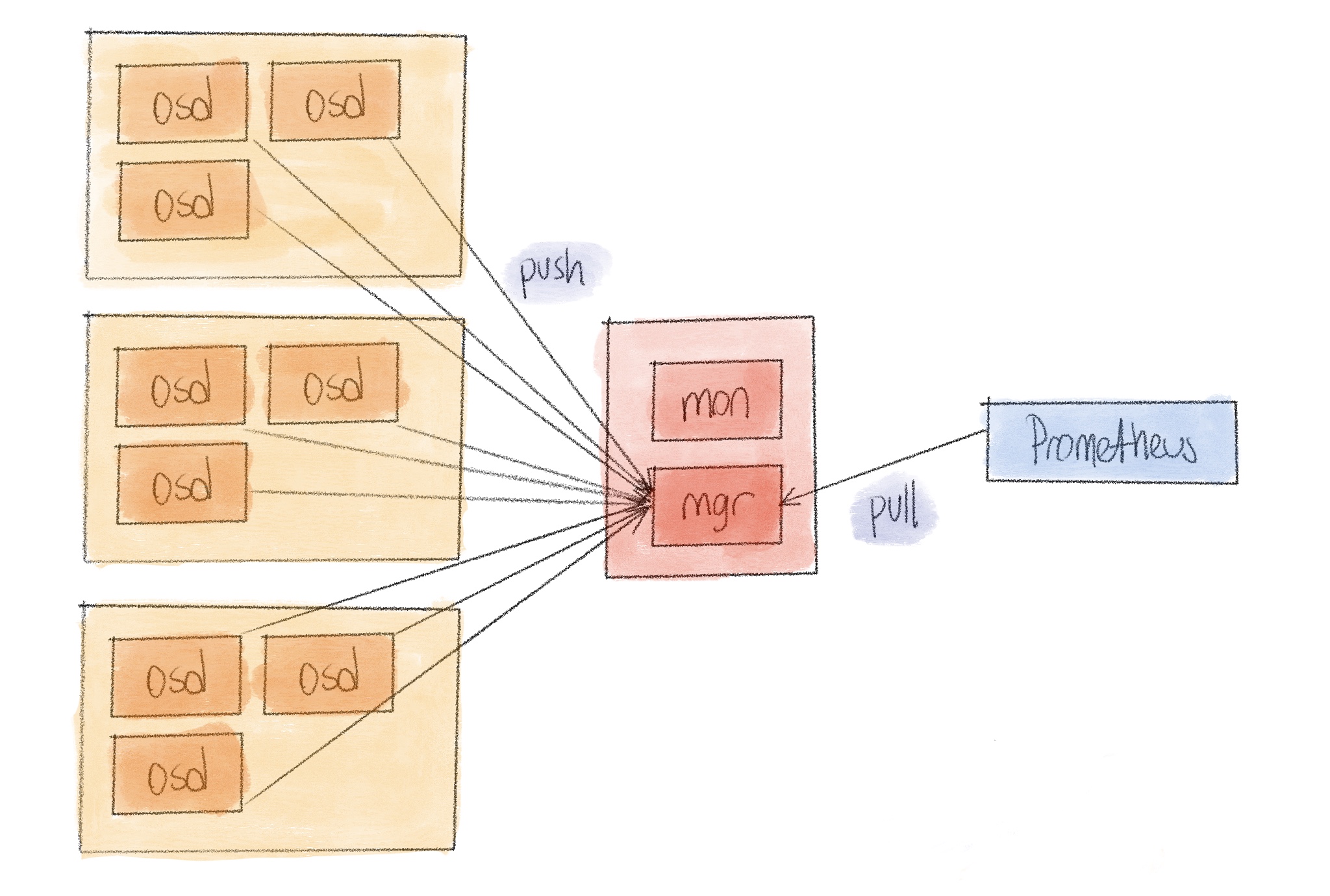

Distributed ceph monitoring

As with any distributed system, the reliability of ceph operations largely depends on the available monitoring. Over the years we have seen and deployed many solutions for this purpose, ranging from zabbix to opentsdb and others. Nowadays our default choice is Prometheus, and that goes for ceph monitoring as well.

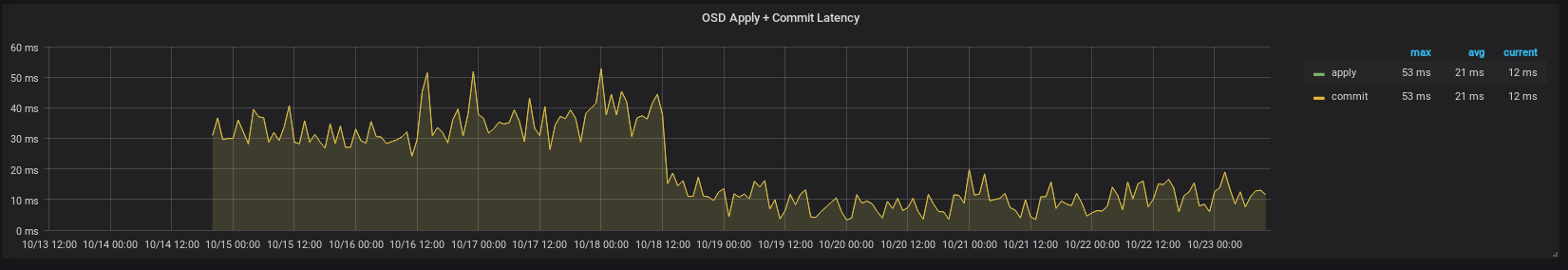

A journey through the write caches

As is customary for any hardware, we had to test the new drives in one of our latest deployments, especially to see how they behave with respect to write caching. This specific deployment had RAID controllers set to JBOD mode, exposing all drives directly to the operating system, and we use those drives as bluestore ceph osds.

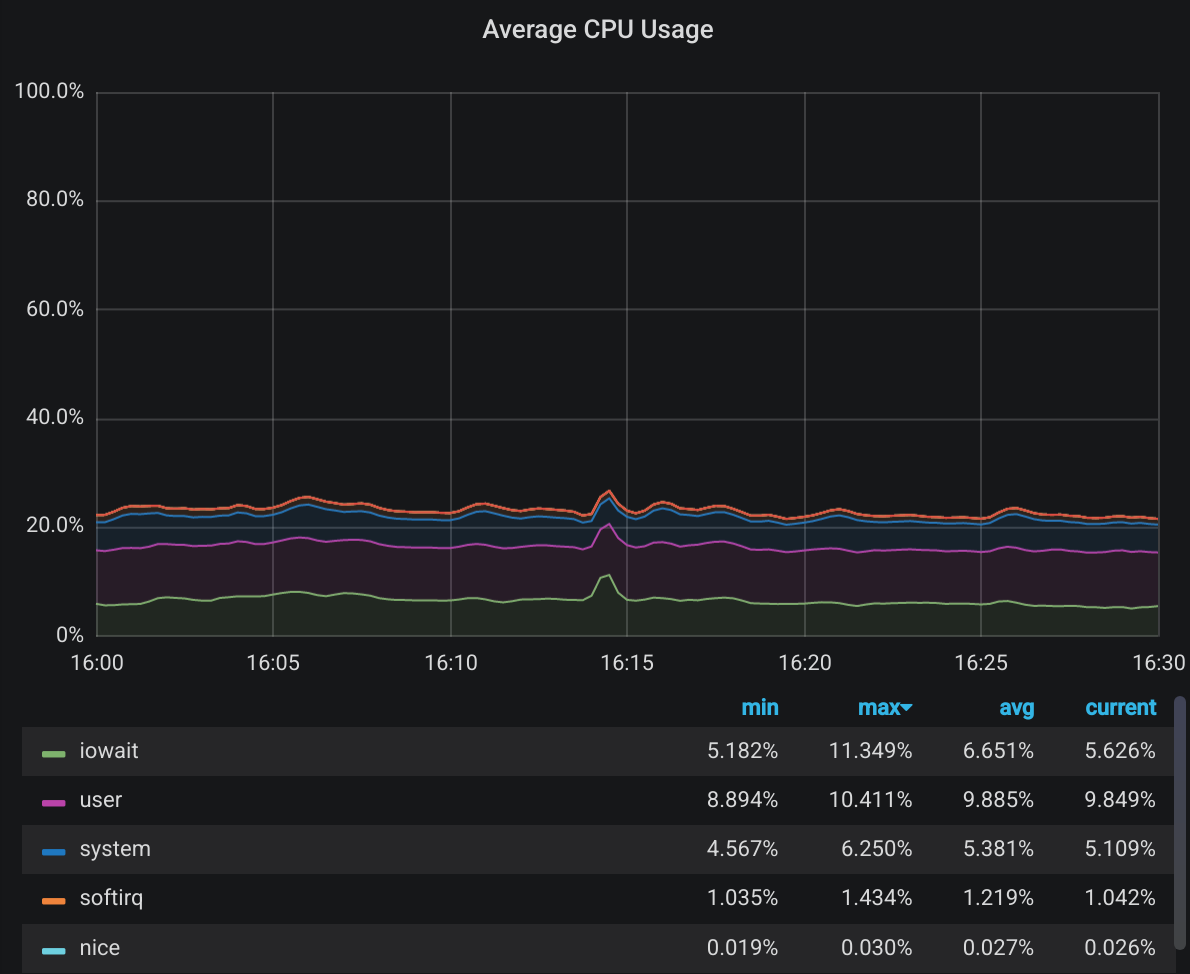

Heaviness of large ceph clusters

Data capacity is the first thing that comes to mind when talking about large ceph clusters, or data storage systems in general. The number of drives is another measure to think about. And sometimes maximum iops is something to look out for, especially when considering a full-flash / nvme cluster. But heaviness? What does that even mean?